This article discusses some of the hardware requirements for running LLaMA and Llama 2 locally. Llama 2 is an auto-regressive language model built on the transformer architecture; it works by taking a sequence of words as input and predicting ... One user, iakashpaul, commented on Jul 26, 2023, that they ran Llama 2 7B-Chat on an RTX 2070S with bitsandbytes FP4, a Ryzen 5 3600, and 32GB of RAM. The performance of a Llama 2 model depends heavily on the hardware it is running on. Meta describes Llama 2 as the next generation of its open source large language model, available for free for research and commercial use.
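To make "auto-regressive" concrete: the model scores candidate next tokens given everything generated so far, one token is picked and appended, and the loop repeats. The sketch below is a toy illustration only. The `generate` function, the `BIGRAMS` table, and the `<eos>` stop marker are hypothetical stand-ins, not Llama 2 code. A real model conditions on the whole context through a transformer rather than on the last word alone.

```python
from typing import Callable, Dict, List

def generate(next_token_scores: Callable[[List[str]], Dict[str, float]],
             prompt: List[str],
             max_new_tokens: int,
             stop: str = "<eos>") -> List[str]:
    """Greedy auto-regressive decoding: repeatedly append the top-scoring token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)      # scores depend on the context so far
        best = max(scores, key=scores.get)      # greedy choice (no sampling)
        if best == stop:
            break
        tokens.append(best)                     # the output becomes part of the next input
    return tokens

# Hypothetical toy "model": a bigram table that only looks at the last token.
BIGRAMS: Dict[str, Dict[str, float]] = {
    "llama":   {"models": 0.9, "<eos>": 0.1},
    "models":  {"predict": 0.8, "<eos>": 0.2},
    "predict": {"tokens": 0.7, "<eos>": 0.3},
    "tokens":  {"<eos>": 1.0},
}

def toy_scores(context: List[str]) -> Dict[str, float]:
    return BIGRAMS.get(context[-1], {"<eos>": 1.0})

result = generate(toy_scores, ["llama"], max_new_tokens=10)
print(result)  # ['llama', 'models', 'predict', 'tokens']
```

Because each step needs the output of the previous one, generation is sequential, which is one reason the speed of the underlying hardware matters for local inference.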

This release includes model weights and starting code for pre-trained and fine-tuned Llama language models ranging from 7B to 70B parameters. Llama 2 is a new technology that carries potential risks with use. Testing conducted to date has not, and could not, cover all scenarios. In order to ... Update (Dec 14, 2023): We recently released a series of Llama 2 demo apps here. These apps show how to run Llama locally, in the cloud, or on-prem, how ... Open source, free for research and commercial use. We're unlocking the power of these large language models.
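For a rough sense of why parameter count and numeric precision dominate local hardware requirements, the memory needed just to hold the weights is approximately parameters × bits per weight ÷ 8. The sketch below applies that arithmetic to the two ends of the 7B to 70B range. The helper name `weight_memory_gib` is hypothetical, the parameter counts are treated as exactly 7e9 and 70e9 (the nominal sizes, an assumption), and the estimate ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
def weight_memory_gib(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GiB (2**30 bytes)."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30

# Nominal sizes (assumed round numbers) at 16-bit and 4-bit precision.
for name, n_params in [("7B", 7e9), ("70B", 70e9)]:
    for label, bits in [("FP16", 16), ("FP4", 4)]:
        gib = weight_memory_gib(n_params, bits)
        print(f"{name} at {label}: about {gib:.1f} GiB for weights")
```

Under these assumptions, 4-bit weights need a quarter of the memory of 16-bit weights, which is consistent with the report of a 7B chat model running on a consumer GPU using FP4 quantization.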

Explore AI4Chat's comprehensive comparison of AI models: ChatGPT, Bard, Llama 2, and Claude. Comparison for CRE: ChatGPT vs. Bard vs. LLaMa Chat. So which AI chat is right for commercial real estate professionals? A comparison of the latest conversational AI models from OpenAI and Anthropic: ChatGPT 4, LLama, Bard, and Claude. The table outlines their key differences in ... ChatGPT, Bard, and LLaMa are large language models (LLMs) designed for conversational AI applications. Are you fascinated by how artificial intelligence can write natural language text? This article compares two of the most impressive ...

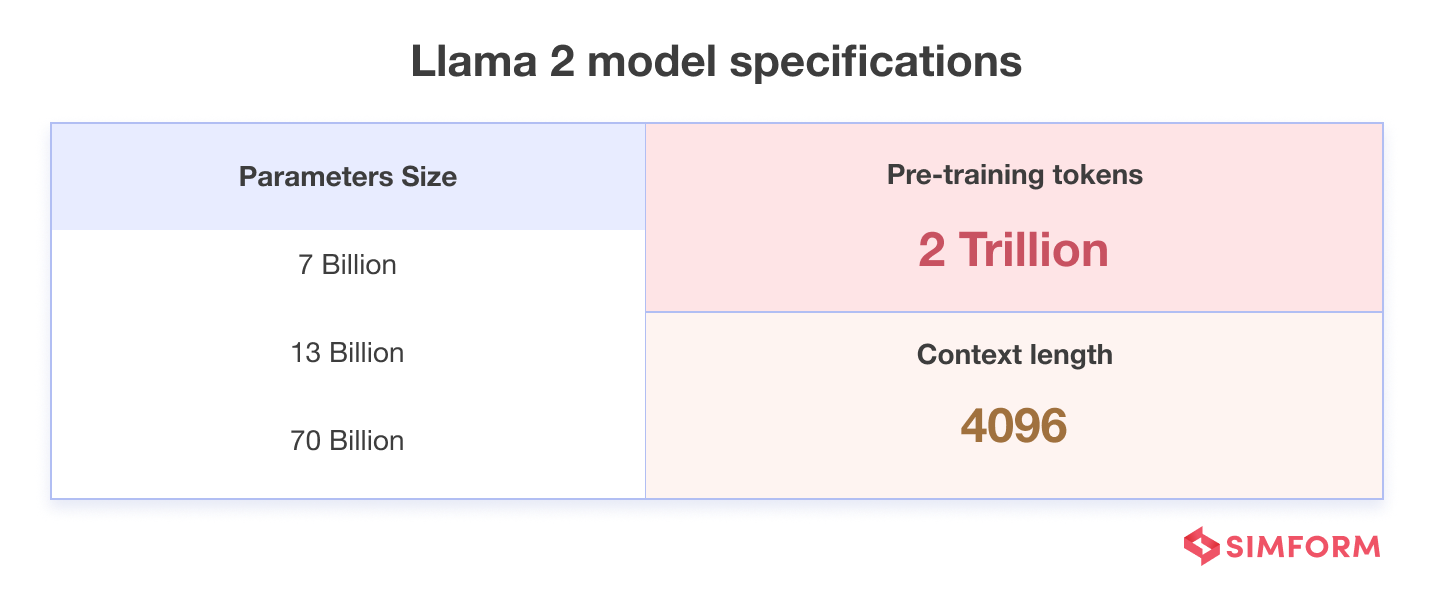

Llama 2, much like other AI models, is built on a classic Transformer architecture. To make the 2,000,000,000,000 (2 trillion) tokens and internal weights easier to handle, Meta ... Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The Llama 2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. Llama 2 is a family of pre-trained and fine-tuned large language models (LLMs) released by Meta AI in ...
